Deep Learning for Image Denoising: A Photographer's Guide

Understanding Image Noise and Its Impact on Photography

Did you know that the pursuit of the perfect, noise-free image has driven technological innovation in photography for decades? Image noise can be a photographer's biggest frustration, turning a potentially stunning shot into a grainy, distracting mess. Let's dive into understanding image noise and its impact on your photography.

Image noise refers to those random variations in brightness or color that can appear as unwanted specks or patterns in your photos. Think of it as the visual equivalent of static on a radio. Several types of noise exist, each with unique characteristics.

- Gaussian noise is a common type that follows a normal distribution, affecting each pixel with a random value. In a photograph, it appears as random variations in brightness and color, giving the image a grainy or speckled look.

- Salt & Pepper noise manifests as random white and black pixels scattered across the image. You'll see isolated black and white dots that stand out starkly against the surrounding pixels.

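- Poisson (shot) noise is signal-dependent. It comes from the random arrival of photons, and its standard deviation grows with the square root of the light collected. Because the signal grows faster than the noise, brighter areas actually have a better signal-to-noise ratio, so shot noise is most visible in shadows and in low-light or high-ISO shots where the signal itself is weak.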

- Speckle noise is often found in radar and ultrasound images, creating a granular texture. While less common in standard photography, it can sometimes manifest as a subtle, granular pattern in very specific imaging scenarios or as an artifact of certain processing techniques.
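To make these four noise types concrete, here is a minimal NumPy sketch that adds each one to a grayscale image stored as floats between 0 and 1. The function names and parameters (`sigma`, `amount`, `photons`) are illustrative choices rather than a standard API, and modeling speckle as multiplicative Gaussian noise is a common simplifying assumption. The demo applies Poisson noise to an image with a dark half and a bright half to show how signal-dependent noise behaves.

```python
import numpy as np

def add_gaussian(img, sigma, rng):
    """Additive noise: each pixel gets an independent N(0, sigma^2) offset."""
    return img + rng.normal(0.0, sigma, img.shape)

def add_salt_and_pepper(img, amount, rng):
    """Force a fraction `amount` of pixels to pure black (0) or pure white (1)."""
    out = img.copy()
    r = rng.random(img.shape)
    out[r < amount / 2] = 0.0               # pepper
    out[r > 1 - amount / 2] = 1.0           # salt
    return out

def add_poisson(img, photons, rng):
    """Shot noise: treat each pixel as a photon count with mean img * photons."""
    return rng.poisson(img * photons) / photons

def add_speckle(img, sigma, rng):
    """Multiplicative noise: the perturbation scales with the pixel's own value."""
    return img * (1.0 + rng.normal(0.0, sigma, img.shape))

rng = np.random.default_rng(0)
img = np.full((100, 100), 0.1)
img[:, 50:] = 0.9                           # dark left half, bright right half
shot = add_poisson(img, photons=100, rng=rng)
dark, bright = shot[:, :50], shot[:, 50:]
print("absolute noise (std):", dark.std(), bright.std())
print("relative noise (std/mean):", dark.std() / dark.mean(), bright.std() / bright.mean())
```

The bright half should show the larger standard deviation but the smaller noise relative to its brightness. Gaussian and speckle outputs can leave the 0 to 1 range, so clip them before saving or displaying.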

Regardless of the type, noise negatively impacts image quality, leading to a loss of detail, reduced sharpness, and distracting artifacts.

Several factors contribute to image noise, and understanding them can help you minimize its effects.

- Sensor size and ISO settings are critical. A smaller sensor collects less light, and a higher ISO amplifies the sensor's signal, boosting the noise along with it. Pushing your camera's ISO higher, especially on a smaller sensor, is a surefire way to get more grain.

- Long exposure photography can also be a culprit. During long exposures the sensor accumulates thermal noise (dark current), which gets worse as the sensor heats up and can show up as hot pixels. This is why many cameras offer a long-exposure noise reduction option.

- Environmental factors like low light conditions and high temperatures can exacerbate the problem. Shooting in a dark room or a hot environment? Expect more noise.

Denoising is essential for photographers aiming to achieve professional-quality results.

- Good denoising balances noise reduction against detail retention, keeping image details and sharpness intact. It's all about finding that sweet spot where the noise is gone but your subject's fine features aren't.

- Denoising improves image aesthetics, producing clean, visually appealing photographs. Nobody likes a noisy portrait!

- It enhances post-processing flexibility. Denoising is a crucial step in the editing workflow because it gives you more latitude for creative adjustments: you can push your edits further when you start with a cleaner image.

As raver119 explains, image denoising is a viable computer vision problem with more than one solution, making it a fascinating area to explore with neural networks.

Understanding the impact of image noise sets the stage for exploring how deep learning can provide effective solutions. Next, we'll examine traditional denoising techniques and their limitations.

Traditional Image Denoising Techniques: Limitations and Challenges

Did you know that some of the earliest attempts at image denoising date back to the mid-20th century? While our tools have evolved, the core challenge remains: how do we eliminate noise without sacrificing crucial image details? Traditional image denoising techniques have been the cornerstone of photography for decades, but they come with their own set of limitations and challenges.

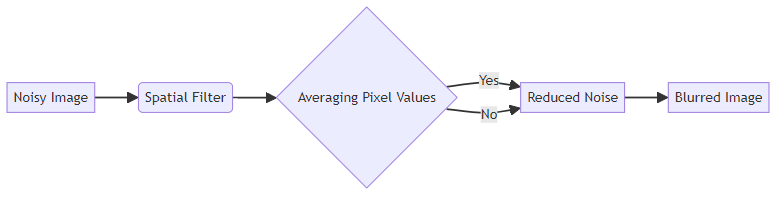

Spatial domain filtering is one of the most intuitive approaches to image denoising. These filters operate directly on the pixels of an image, modifying their values based on the values of neighboring pixels. Common spatial domain filters include:

- Mean Filter: This filter replaces each pixel's value with the average value of its surrounding pixels. It reduces noise effectively but blurs image details, because averaging smooths out sharp edges and fine textures along with the noise, averaging out the good with the bad.

- Median Filter: Instead of averaging, the median filter replaces each pixel with the median value of its neighbors. This is particularly effective at removing salt-and-pepper noise while preserving edges better than the mean filter. It works well because it picks a value that's actually present in the neighborhood, making it less prone to creating artificial blur.

- Gaussian Filter: This filter uses a weighted average in which closer pixels have a greater influence. It's useful for reducing Gaussian noise, but like the mean filter it still blurs fine details, because weighted averaging also smooths out sharp transitions and fine textures.

The primary limitation of spatial domain filters is their tendency to blur image details. Averaging pixel values inevitably smooths out sharp edges and fine textures, resulting in a loss of image sharpness.
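The three filters above can be sketched in a few lines of NumPy. This is a minimal illustration, assuming a grayscale float image and reflected borders; libraries such as SciPy and OpenCV ship optimized versions. The demo corrupts a flat gray image with salt-and-pepper noise, where the median filter should recover the original almost exactly while the mean and Gaussian filters smear each speck into its neighbors.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _windows(img, size):
    """Every size x size neighborhood; reflected borders keep the output the same shape."""
    pad = size // 2
    return sliding_window_view(np.pad(img, pad, mode="reflect"), (size, size))

def mean_filter(img, size=3):
    return _windows(img, size).mean(axis=(-2, -1))

def median_filter(img, size=3):
    return np.median(_windows(img, size), axis=(-2, -1))

def gaussian_filter(img, sigma=1.0, size=5):
    offsets = np.arange(size) - size // 2
    kernel = np.exp(-(offsets[:, None] ** 2 + offsets[None, :] ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()              # closer pixels get larger weights; weights sum to 1
    return np.einsum("hwij,ij->hw", _windows(img, size), kernel)

# Demo: a flat gray image hit by about 4% salt-and-pepper noise.
rng = np.random.default_rng(0)
clean = np.full((32, 32), 0.5)
noisy = clean.copy()
r = rng.random(clean.shape)
noisy[r < 0.02] = 0.0
noisy[r > 0.98] = 1.0
for name, out in [("mean", mean_filter(noisy)), ("median", median_filter(noisy)),
                  ("gaussian", gaussian_filter(noisy))]:
    print(name, np.abs(out - clean).mean())
```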

Transform domain filtering takes a different approach by transforming the image into a different domain, such as the frequency domain, before applying noise reduction. Two popular transform domain methods include:

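- Wavelet Transform: This method decomposes the image into components at different scales (frequency bands) and positions. Natural images are represented by relatively few large coefficients, while random noise spreads evenly across all of them, so the many small high-frequency coefficients are dominated by noise and can be selectively suppressed.

- Fourier Transform: Like the wavelet transform, the Fourier transform represents the image in terms of its frequency components, which allows for targeted noise reduction in specific frequency bands. Unlike wavelets, however, its basis functions span the whole image, so it cannot treat a smooth region and a detailed region differently.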

While transform domain methods can be more effective at preserving image details, they have limitations of their own. A significant one is computational cost: the more elaborate transform-domain methods can be slow, especially on large images. Aggressively removing frequency components can also introduce ringing artifacts near sharp edges.
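Here is a minimal sketch of the wavelet idea: a single level of the Haar wavelet transform, written out by hand so it needs only NumPy, followed by soft thresholding of the detail (high-frequency) coefficients. Real wavelet denoisers typically use several decomposition levels, smoother wavelets, and a threshold estimated from the noise level; the threshold of 0.3 used here (three times the simulated noise sigma) is a heuristic assumption.

```python
import numpy as np

S = np.sqrt(2.0)

def haar2d(x):
    """One level of the orthonormal 2-D Haar transform (height and width must be even)."""
    lo = (x[0::2, :] + x[1::2, :]) / S            # sums of row pairs
    hi = (x[0::2, :] - x[1::2, :]) / S            # differences of row pairs
    approx = (lo[:, 0::2] + lo[:, 1::2]) / S      # coarse, low-frequency band
    details = ((lo[:, 0::2] - lo[:, 1::2]) / S,   # three high-frequency detail bands
               (hi[:, 0::2] + hi[:, 1::2]) / S,
               (hi[:, 0::2] - hi[:, 1::2]) / S)
    return approx, details

def ihaar2d(approx, details):
    """Exact inverse of haar2d."""
    d1, d2, d3 = details
    h, w = approx.shape
    lo, hi = np.empty((h, 2 * w)), np.empty((h, 2 * w))
    lo[:, 0::2], lo[:, 1::2] = (approx + d1) / S, (approx - d1) / S
    hi[:, 0::2], hi[:, 1::2] = (d2 + d3) / S, (d2 - d3) / S
    x = np.empty((2 * h, 2 * w))
    x[0::2, :], x[1::2, :] = (lo + hi) / S, (lo - hi) / S
    return x

def soft_threshold(c, t):
    """Shrink coefficients toward zero by t; anything smaller than t becomes 0."""
    return np.sign(c) * np.maximum(np.abs(c) - t, 0.0)

def wavelet_denoise(img, t):
    approx, details = haar2d(img)
    return ihaar2d(approx, tuple(soft_threshold(d, t) for d in details))

rng = np.random.default_rng(0)
clean = np.full((64, 64), 0.5)
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
denoised = wavelet_denoise(noisy, t=0.3)          # heuristic threshold: 3x the noise sigma
print(np.mean((noisy - clean) ** 2), np.mean((denoised - clean) ** 2))
```

Because the transform is orthonormal, the noise spreads evenly over all four bands, so shrinking the three detail bands on this flat image removes most of the noise energy. On real photos, edges also produce large detail coefficients; those survive the threshold, shrunk by `t`.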

Variational methods use mathematical models to estimate the clean image by minimizing an energy function. A well-known example is Total Variation (TV) minimization, which seeks to reduce the total variation in the image while maintaining fidelity to the original noisy data. In essence, 'total variation' for an image refers to the sum of the magnitudes of the gradients across the image. Minimizing it encourages regions of constant color or intensity, which helps to smooth out noise while ideally preserving sharp edges.

However, variational methods are not without their challenges. These methods often require careful parameter tuning to achieve optimal results. Finding the right balance between noise reduction and detail preservation can be tricky.
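To make the energy-minimization idea concrete, here is a minimal sketch of Rudin-Osher-Fatemi (ROF) style TV denoising using plain gradient descent. It minimizes E(u) = ½·Σ(u − f)² + λ·Σ√(|∇u|² + ε²), where f is the noisy image and u is the estimate. The small constant ε is an addition of this sketch that makes the energy differentiable, and practical implementations usually rely on faster dedicated solvers. The parameter `lam` (λ) is exactly the kind of knob that needs careful tuning: larger values remove more noise but flatten more texture.

```python
import numpy as np

def grad(u):
    """Forward differences; the last column/row gets a zero difference."""
    gx, gy = np.zeros_like(u), np.zeros_like(u)
    gx[:, :-1] = u[:, 1:] - u[:, :-1]
    gy[:-1, :] = u[1:, :] - u[:-1, :]
    return gx, gy

def div(px, py):
    """Discrete divergence, defined as the negative adjoint of grad."""
    dx, dy = np.zeros_like(px), np.zeros_like(py)
    dx[:, 0], dx[:, 1:-1], dx[:, -1] = px[:, 0], px[:, 1:-1] - px[:, :-2], -px[:, -2]
    dy[0, :], dy[1:-1, :], dy[-1, :] = py[0, :], py[1:-1, :] - py[:-2, :], -py[-2, :]
    return dx + dy

def tv_energy(u, f, lam, eps):
    """Data fidelity plus lam * (smoothed) total variation."""
    gx, gy = grad(u)
    return 0.5 * np.sum((u - f) ** 2) + lam * np.sum(np.sqrt(gx**2 + gy**2 + eps**2))

def tv_denoise(f, lam=0.1, eps=0.1, iters=200):
    """Plain gradient descent on tv_energy, with a step size small enough to decrease it."""
    step = 1.0 / (1.0 + 8.0 * lam / eps)
    u = f.copy()
    for _ in range(iters):
        gx, gy = grad(u)
        norm = np.sqrt(gx**2 + gy**2 + eps**2)
        u -= step * ((u - f) - lam * div(gx / norm, gy / norm))
    return u

rng = np.random.default_rng(0)
clean = np.full((32, 32), 0.3)
clean[:, 16:] = 0.8                               # one sharp vertical edge
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
denoised = tv_denoise(noisy)
```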

Traditional denoising techniques have paved the way for more advanced methods. Next up, we'll explore how deep learning is revolutionizing image denoising, offering new possibilities for photographers and image processing professionals.

Deep Learning for Image Denoising: A New Era

Is it possible to teach a computer to "see" past the noise and reveal the true image hidden underneath? Deep learning is doing just that, offering a new era in image denoising that promises to revolutionize how photographers and other professionals handle image quality.

The core idea behind using deep learning for image denoising is its remarkable ability to learn complex patterns directly from data. This capability makes it exceptionally well-suited for a problem like image denoising, where the noise can be intricate and varied.

Unlike traditional methods that rely on predefined filters and mathematical models, deep learning algorithms can adapt to various types of noise, even those that are difficult to characterize mathematically. For instance, noise that arises from sensor imperfections or complex lighting interactions might not fit neatly into simple mathematical models. Deep learning models, by learning from vast amounts of data, can pick up on these subtle, hard-to-define noise characteristics and effectively remove them.

- Deep learning offers significant advantages over traditional denoising techniques. It preserves image details more effectively, avoiding the blurring often associated with conventional methods. Additionally, deep learning models can be trained to handle various noise types, making them more versatile in real-world applications.

- The effectiveness of deep learning models hinges on large datasets. These datasets allow the models to learn intricate relationships between noisy and clean images, and the more diverse and extensive the training data, the better the model generalizes to unseen images. In research settings, a "large dataset" can mean anything from tens of thousands to millions of image pairs. In medical imaging, for example, a model trained on a vast collection of MRI scans can enhance image clarity, aiding in more accurate diagnoses. Denoising is also valuable in retail, where high-quality product images are essential for online sales: autoencoders, a type of deep learning architecture, can remove noise from product photos taken in less-than-ideal lighting, making them more appealing to customers.

Several deep learning architectures have proven effective for image denoising, each with unique strengths.

- Convolutional Neural Networks (CNNs) are particularly adept at capturing local features within images. Their architecture allows them to identify and suppress noise while preserving essential details. The convolutional layers are crucial for extracting relevant features, making CNNs a popular choice for denoising tasks. In denoising, these "relevant features" are patterns like edges, textures, and shapes that are part of the true image, allowing the model to differentiate them from random noise.

- Autoencoders learn compact representations of clean images. By training on noisy images and aiming to reconstruct clean versions, autoencoders can effectively separate noise from essential image features. They achieve this by compressing the image into a lower-dimensional latent space (the "compact representation"), which forces the autoencoder to learn the most salient features of the image while discarding noise.

- Generative Adversarial Networks (GANs) take a different approach by generating clean images from noisy inputs. GANs consist of two networks: a generator that creates images and a discriminator that evaluates their authenticity. This adversarial process pushes the generator toward realistic, noise-free outputs.

Denoising real-world noisy images presents unique challenges. While deep learning models can be trained on synthetic noise, real-world noise is often more complex and less predictable.

- Synthetic noise models are valuable for training deep learning models, but they often fail to capture the full complexity of real-world noise. To address this, researchers often combine synthetic and real-world data to improve the robustness of denoising models. A common strategy is to take clean images and artificially add synthetic noise to create noisy-clean pairs for training. If real-world noisy images with corresponding clean versions are available, they are also used.

- Using diverse and representative datasets is crucial for training effective denoising models. These datasets should include a wide range of noise types and image content so the model can generalize well to new, unseen images. As "Efficient image denoising using deep learning: A brief survey" explains, continuing research and innovation have produced more effective and accurate solutions for image denoising.

Now that we've explored the new era of deep learning for image denoising and the gap between real-world and synthetic noise, let's take a closer look at the architectures themselves.

Understanding Deep Learning Architectures for Image Denoising

Deep learning architectures have revolutionized image denoising, but how do these complex models actually work? Let's explore some key architectures that are making waves in the photography world.

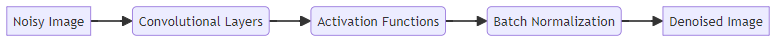

CNNs are a cornerstone of deep learning for image processing. They excel at capturing local features and patterns within images, making them ideal for identifying and suppressing noise while preserving essential details.

- Convolutional layers form the foundation of CNNs. These layers use filters to extract features from the image, such as edges, textures, and shapes. A 'filter' here is a small matrix of weights that slides over the image to detect specific patterns like edges or corners. By convolving these filters across the image, CNNs can learn to recognize complex patterns.

- Activation functions, like ReLU (Rectified Linear Unit), introduce non-linearity into the model. This allows the network to learn more complex relationships between pixels, enhancing its ability to distinguish noise from important image details.

- Batch normalization is a technique used to stabilize the learning process and speed up training. It normalizes the activations of each layer, reducing the internal covariate shift and allowing for higher learning rates. 'Internal covariate shift' refers to the change in the distribution of network activations due to the updates of parameters in the preceding layers during training. Reducing this shift helps the network learn more quickly and stably.
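The three building blocks above can be sketched directly in NumPy. This is a minimal, forward-pass-only illustration with random (untrained) weights; frameworks such as PyTorch or TensorFlow provide optimized layers with learned parameters and automatic gradients. Like most deep learning libraries, `conv2d` here actually computes a cross-correlation, and `batch_norm` uses the current batch's statistics, as it does during training.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d(x, w, b):
    """'Same'-size convolutional layer (cross-correlation, as in most DL libraries).
    x: (C_in, H, W) input, w: (C_out, C_in, k, k) filters, b: (C_out,) biases."""
    k = w.shape[-1]
    xp = np.pad(x, ((0, 0), (k // 2, k // 2), (k // 2, k // 2)))   # zero padding
    win = sliding_window_view(xp, (k, k), axis=(1, 2))             # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", win, w) + b[:, None, None]

def relu(x):
    """Non-linearity: keep positive responses, zero out negative ones."""
    return np.maximum(x, 0.0)

def batch_norm(x, gamma, beta, eps=1e-5):
    """Training-mode batch norm for x: (N, C, H, W), using per-channel batch statistics."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma[None, :, None, None] * x_hat + beta[None, :, None, None]

rng = np.random.default_rng(0)
batch = rng.random((4, 1, 16, 16))                          # 4 grayscale 16x16 images
w = rng.normal(0.0, 0.1, (8, 1, 3, 3))                      # 8 untrained 3x3 filters
b = np.zeros(8)
features = np.stack([conv2d(img, w, b) for img in batch])   # (4, 8, 16, 16)
out = relu(batch_norm(features, gamma=np.ones(8), beta=np.zeros(8)))
```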

Two successful CNN-based denoising models are DnCNN and FFDNet. DnCNN combines residual learning (the network predicts the noise, which is then subtracted from the input) with batch normalization, and it achieved state-of-the-art denoising performance when it was introduced. FFDNet, as mentioned in Image Denoising with Deep Learning, offers a fast and flexible solution for CNN-based image denoising. That article gives a general overview of deep learning for image denoising; it mentions these models but doesn't delve deeply into their specific mechanics.
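Residual learning is easy to see in code: the network is trained to output the noise, and the clean estimate is the input minus that prediction. In this sketch, `stand_in_noise_predictor` is a hypothetical placeholder (a fixed 3x3 blur) standing in for a trained DnCNN-style network; only the training target and the inference step illustrate the residual idea.

```python
import numpy as np

def box_blur(img):
    """3x3 average with reflected borders."""
    p = np.pad(img, 1, mode="reflect")
    h, w = img.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0

def stand_in_noise_predictor(noisy):
    # HYPOTHETICAL placeholder for a trained network such as DnCNN:
    # it guesses that the noise is whatever a 3x3 blur removes.
    return noisy - box_blur(noisy)

def residual_loss(predict_noise, noisy, clean):
    """Residual learning: the training target is the noise (noisy - clean), not the clean image."""
    return np.mean((predict_noise(noisy) - (noisy - clean)) ** 2)

def denoise(predict_noise, noisy):
    """Inference: subtract the predicted noise from the input."""
    return noisy - predict_noise(noisy)

rng = np.random.default_rng(0)
clean = np.full((32, 32), 0.5)
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
print(residual_loss(stand_in_noise_predictor, noisy, clean))
print(np.mean((denoise(stand_in_noise_predictor, noisy) - clean) ** 2))
```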

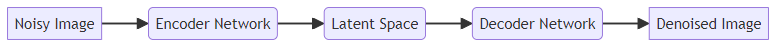

Autoencoders offer a different approach to image denoising by learning to compress and reconstruct images. This process allows them to separate noise from essential image features.

- Autoencoders consist of two main parts: an encoder and a decoder. The encoder compresses the input image into a lower-dimensional latent space, while the decoder reconstructs the image from this compressed representation.

- Autoencoders learn to compress and reconstruct images by minimizing the difference between the reconstructed output and a target image (for a denoising autoencoder, the clean version of the noisy input). This forces the network to learn a compact representation of the image, capturing the most important features while discarding noise.

- Denoising autoencoders are trained specifically to remove noise. They are trained on noisy images and aim to reconstruct clean versions, effectively learning to filter out the noise.
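A denoising autoencoder can be sketched at toy scale with a purely linear encoder and decoder trained by gradient descent. Everything here is an illustrative assumption: the "patches" are 64-value vectors built from a hidden 4-dimensional pattern plus Gaussian noise, the bottleneck has 8 values, and real denoising autoencoders are deeper, nonlinear, and usually convolutional. The key detail is that the loss compares the reconstruction with the clean patch, not with the noisy input.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (an assumption for illustration): 512 clean "patches" of 64 values each
# (think flattened 8x8), all built from a hidden 4-dimensional pattern, plus noise.
basis = rng.normal(0.0, 1.0 / 8.0, (64, 4))
clean = basis @ rng.normal(0.0, 1.0, (4, 512))          # one patch per column
noisy = clean + rng.normal(0.0, 0.1, clean.shape)

# Linear autoencoder with an 8-value bottleneck.
enc = rng.normal(0.0, 0.1, (8, 64))                     # encoder: 64 -> 8
dec = rng.normal(0.0, 0.1, (64, 8))                     # decoder: 8 -> 64

def loss(enc, dec):
    """Half the squared reconstruction error per patch, measured against CLEAN patches."""
    return 0.5 * np.mean(np.sum((dec @ (enc @ noisy) - clean) ** 2, axis=0))

initial = loss(enc, dec)
lr, n = 0.05, noisy.shape[1]
for _ in range(2000):
    code = enc @ noisy                                  # compressed representation
    err = dec @ code - clean                            # reconstruction error vs. clean target
    grad_dec = err @ code.T / n
    grad_enc = dec.T @ err @ noisy.T / n
    dec -= lr * grad_dec
    enc -= lr * grad_enc
final = loss(enc, dec)
print(initial, final)
```

The bottleneck cannot store all 64 noisy values, so the best the network can do is keep the directions in which the clean patches vary and drop most of the noise.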

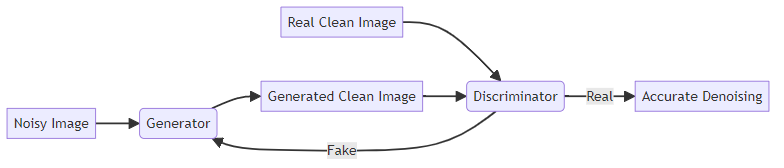

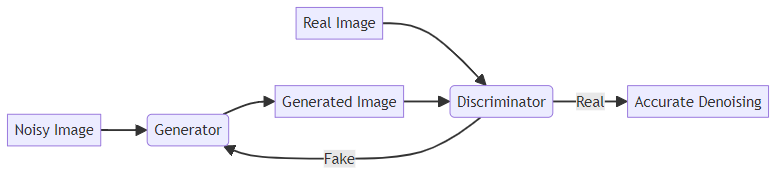

GANs (Generative Adversarial Networks) take a unique approach by generating clean images from noisy inputs using an adversarial process.

- GANs consist of two networks: a generator and a discriminator. The generator creates images from noisy inputs, while the discriminator evaluates their authenticity, distinguishing between real and generated images.

- GANs learn to generate realistic images through this adversarial process. The generator tries to fool the discriminator, while the discriminator tries to correctly identify the generated images. This constant competition pushes the generator toward realistic, noise-free images, although it can also lead the generator to invent plausible-looking detail that was not in the original scene.

- Training GANs can be challenging due to issues like mode collapse and instability. Mode collapse occurs when the generator produces a limited variety of images, failing to capture the full diversity of the data. Instability refers to oscillations in the training process, where the loss might fluctuate wildly or the model might fail to converge, often indicated by erratic changes in image quality during training.
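The adversarial objective comes down to two binary cross-entropy losses computed from the discriminator's outputs (its estimated probability that an image is a real clean photo). The sketch below uses the widely used "non-saturating" generator loss and, as is common in image-restoration GANs, adds the adversarial term to a pixel loss so the output stays faithful to the input. The weight `adv_weight` is a hypothetical value that would need tuning, and the networks themselves are omitted.

```python
import numpy as np

EPS = 1e-12   # keeps log() finite if a probability is exactly 0 or 1

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(clean photo) toward 1 and D(generated) toward 0."""
    return -np.mean(np.log(d_real + EPS) + np.log(1.0 - d_fake + EPS))

def generator_adv_loss(d_fake):
    """Non-saturating generator loss: reward outputs the discriminator scores as real."""
    return -np.mean(np.log(d_fake + EPS))

def generator_total_loss(denoised, clean, d_fake, adv_weight=1e-3):
    """Pixel fidelity plus a small adversarial term (adv_weight is a hypothetical value)."""
    return np.mean((denoised - clean) ** 2) + adv_weight * generator_adv_loss(d_fake)

# Example discriminator outputs for a batch of two images.
d_real = np.array([0.9, 0.8])   # scores for real clean photos
d_fake = np.array([0.2, 0.3])   # scores for the generator's denoised outputs
print(discriminator_loss(d_real, d_fake), generator_adv_loss(d_fake))
```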

Understanding these deep learning architectures is key to leveraging their power for image denoising. Next, we'll explore data preparation and augmentation techniques to further enhance denoising performance.

Practical Tips and Techniques for Deep Learning Image Denoising

Want to take your deep learning image denoising to the next level? Mastering data preparation, loss functions, and training techniques can significantly improve your results.

One of the most crucial steps is preparing your data.

- Diverse and representative training data is essential for robust denoising. Include images with various scenes, lighting conditions, and noise types to ensure your model generalizes well. For example, in astronomy, training data may include images of different galaxies and nebulae captured under various atmospheric conditions.

- Data augmentation can artificially increase the size of your dataset. Common techniques include random cropping, flipping, and rotation. These techniques help the model generalize better by exposing it to variations in image orientation and composition.

- Noise synthesis involves creating synthetic noise patterns to augment your training data. This can be especially useful when real-world noisy data is limited. Using synthetic noise helps the model learn to identify and remove specific types of noise more effectively. For instance, you might synthesize Gaussian noise by adding random values drawn from a normal distribution to your clean images, or Poisson noise by simulating photon counts.
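Augmentation and noise synthesis fit together naturally: augment the clean image first, then synthesize noise on the result, so the noisy input and the clean target stay perfectly aligned. This is a minimal sketch under stated assumptions: the noise model (Poisson shot noise plus Gaussian read noise) and its parameters `photons` and `read_sigma` are illustrative choices, not calibrated values for any real camera.

```python
import numpy as np

def augment(clean, crop, rng):
    """Random crop, optional horizontal flip, and a random 90-degree rotation."""
    h, w = clean.shape
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    patch = clean[top:top + crop, left:left + crop]
    if rng.random() < 0.5:
        patch = patch[:, ::-1]                              # horizontal flip
    return np.rot90(patch, k=rng.integers(0, 4)).copy()

def synthesize_noise(clean, rng, photons=200.0, read_sigma=0.01):
    """Assumed sensor-like model: Poisson shot noise plus Gaussian read noise."""
    shot = rng.poisson(clean * photons) / photons
    return shot + rng.normal(0.0, read_sigma, clean.shape)

def make_training_pair(clean, rng, crop=64):
    """Augment the clean image first, then add noise, so input and target stay aligned."""
    target = augment(clean, crop, rng)
    return synthesize_noise(target, rng), target            # (noisy input, clean target)

rng = np.random.default_rng(0)
photo = rng.random((128, 96))                               # stand-in for a clean photo in 0..1
noisy, target = make_training_pair(photo, rng)
```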

Choosing the right loss function and evaluation metric is vital for guiding your model's learning process.

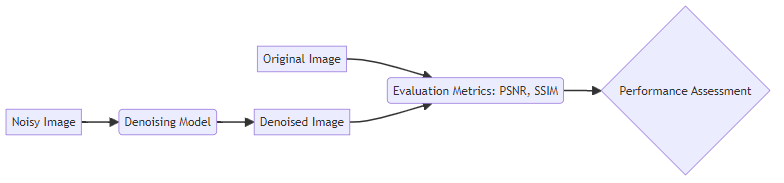

- Commonly used loss functions include Mean Squared Error (MSE). While PSNR and SSIM are primarily evaluation metrics used to quantify image quality after denoising, they can sometimes be incorporated into loss functions, especially in research settings, to directly optimize for these perceptual qualities.

- MSE measures the average squared difference between the pixels of the denoised image and the clean reference image. PSNR (Peak Signal-to-Noise Ratio) quantifies the ratio between the maximum possible power of a signal and the power of the corrupting noise (in practice, the MSE against the reference), expressed in decibels; it essentially measures how much noise remains relative to the signal. SSIM (Structural Similarity Index) assesses image quality by comparing structural information, luminance, and contrast between two images, aiming to better reflect human perception of similarity.

- The best loss function for your specific denoising task depends on the type of noise and the desired outcome. For example, if preserving structural details is crucial, an SSIM-based loss might be a better choice than MSE.

- Evaluation metrics such as PSNR and SSIM help quantify the performance of your denoising model. Higher PSNR and SSIM values generally indicate better image quality.
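PSNR follows directly from the MSE: PSNR = 10·log10(MAX² / MSE), where MAX is the largest possible pixel value (1.0 for images scaled to 0..1). The sketch below computes both, plus a simplified SSIM that applies the SSIM formula once to the whole image. Standard SSIM evaluates the same formula in small local windows and averages the results, so for reported numbers use a library implementation such as scikit-image's `structural_similarity`. `1 - SSIM` is one common way to turn SSIM into a loss.

```python
import numpy as np

def mse(output, reference):
    return np.mean((output - reference) ** 2)

def psnr(output, reference, data_range=1.0):
    """Peak signal-to-noise ratio in decibels; higher is better."""
    err = mse(output, reference)
    return float("inf") if err == 0 else 10.0 * np.log10(data_range ** 2 / err)

def global_ssim(x, y, data_range=1.0):
    """SSIM formula applied once to the whole image (standard SSIM uses local windows)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2   # standard stabilizing constants
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    luminance = (2 * mx * my + c1) / (mx ** 2 + my ** 2 + c1)
    contrast_structure = (2 * cov + c2) / (x.var() + y.var() + c2)
    return luminance * contrast_structure

def ssim_loss(output, reference):
    return 1.0 - global_ssim(output, reference)

rng = np.random.default_rng(0)
reference = np.linspace(0.0, 1.0, 64).reshape(8, 8)
noisy = reference + rng.normal(0.0, 0.1, reference.shape)
print(psnr(noisy, reference), global_ssim(noisy, reference))
```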

Effective training and fine-tuning are essential for achieving optimal denoising performance.

- Selecting the right model architecture is the first step. CNNs, autoencoders, and GANs each have their strengths and weaknesses, as discussed earlier.

- Hyperparameter tuning involves adjusting parameters like learning rate, batch size, and the number of epochs to optimize model performance.

- Transfer learning can significantly speed up training and improve results. Starting from a pre-trained model and fine-tuning it on your specific denoising task lets you leverage knowledge gained from large datasets. The starting point is often a network already trained on a large image dataset such as ImageNet, or a denoiser trained on general-purpose photos. Fine-tuning then retrains the network, often just its later layers, on the specific denoising task, adapting the learned features to the new problem.
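Hyperparameters are easiest to see in a training loop. The sketch below trains the smallest possible "CNN", a single 3x3 filter, with mini-batch gradient descent on MSE. The toy data (flat gray images with heavy Gaussian noise) and the learning rate, batch size, and epoch count are all illustrative assumptions. Passing previously trained weights through `init` mimics fine-tuning: training resumes from those weights instead of starting from scratch.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv3x3_valid(images, kernel):
    """Apply one 3x3 filter to a batch (N, H, W); returns outputs and the input windows."""
    win = sliding_window_view(images, (3, 3), axis=(1, 2))      # (N, H-2, W-2, 3, 3)
    return np.einsum("nhwij,ij->nhw", win, kernel), win

def make_pairs(rng, n, size=16, sigma=0.3):
    """Hypothetical toy data: flat gray images plus heavy Gaussian noise."""
    levels = rng.uniform(0.0, 1.0, (n, 1, 1))
    clean = np.broadcast_to(levels, (n, size, size)).copy()
    return clean + rng.normal(0.0, sigma, clean.shape), clean

def train(noisy, clean, lr=0.1, batch_size=8, epochs=30, init=None, seed=0):
    """Mini-batch gradient descent on MSE; `init` plays the role of pre-trained weights."""
    rng = np.random.default_rng(seed)
    if init is None:
        kernel = np.zeros((3, 3))
        kernel[1, 1] = 1.0                                      # start as the identity filter
    else:
        kernel = init.copy()                                    # fine-tune from earlier weights
    for _ in range(epochs):
        order = rng.permutation(len(noisy))                     # reshuffle every epoch
        for start in range(0, len(noisy), batch_size):
            idx = order[start:start + batch_size]
            out, win = conv3x3_valid(noisy[idx], kernel)
            err = out - clean[idx][:, 1:-1, 1:-1]               # compare with the clean target
            grad = 2.0 * np.einsum("nhw,nhwij->ij", err, win) / err.size
            kernel -= lr * grad
    return kernel

rng = np.random.default_rng(1)
train_noisy, train_clean = make_pairs(rng, 64)
kernel = train(train_noisy, train_clean)
test_noisy, test_clean = make_pairs(rng, 16)
out, _ = conv3x3_valid(test_noisy, kernel)
print("noisy MSE:", np.mean((test_noisy - test_clean) ** 2))
print("filtered MSE:", np.mean((out - test_clean[:, 1:-1, 1:-1]) ** 2))
```

On this data the filter should drift from the identity toward a roughly uniform 3x3 average, since averaging is the best linear way to suppress independent noise on a flat image.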

With a solid understanding of these practical tips, you're well-equipped to tackle the challenges of deep learning image denoising. Next, we'll look at how these techniques show up in practical photo-editing tools.

Leveraging AI for Flawless Photos: Snapcorn's Suite of Tools

Want flawless photos effortlessly? Snapcorn's AI suite offers photographers background removal, image upscaling, colorization, and restoration—all simplified for stunning results. Snapcorn's tools likely leverage deep learning models, similar to those discussed, to analyze images and perform these complex tasks. For instance, their AI might use autoencoders or GANs to enhance image detail during upscaling or to intelligently reconstruct missing parts of an image during restoration.

The Future of Image Denoising: Emerging Trends and Research Directions

What if AI could anticipate and correct noise issues before you even press the shutter? The future of image denoising is rapidly evolving.

- New architectures are continuously emerging, pushing the boundaries of what's possible.

- Attention mechanisms are improving the focus on relevant image features, leading to better noise separation. These mechanisms allow the model to weigh the importance of different parts of the image when processing it, helping it focus on noisy areas or important details.

- Transformer networks, known for capturing long-range dependencies, are being adapted to handle complex image noise patterns. Unlike CNNs that focus on local regions, transformers can consider relationships between pixels that are far apart, which can be beneficial for understanding and removing global noise patterns.
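The core operation behind both attention mechanisms and transformers is scaled dot-product attention. This minimal sketch follows the Vision Transformer idea of treating non-overlapping image patches as tokens, so every patch can draw on every other patch, however far apart. It uses a single head with random projection matrices `wq`, `wk`, `wv` (in a real model these are learned) and omits positional information, multiple heads, and the other layers a real denoising transformer would have.

```python
import numpy as np

def image_to_patches(img, p):
    """Split an (H, W) image into non-overlapping p x p patches, one flattened patch per row."""
    h, w = img.shape
    return img.reshape(h // p, p, w // p, p).transpose(0, 2, 1, 3).reshape(-1, p * p)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)             # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, wq, wk, wv):
    """Single-head scaled dot-product attention over all tokens."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # (n, n): how much each patch attends to each other
    return weights @ v, weights

rng = np.random.default_rng(0)
img = rng.random((32, 32))
tokens = image_to_patches(img, 8)                      # 16 patches, 64 values each
d = 16                                                 # hypothetical embedding size
wq, wk, wv = (rng.normal(0.0, 0.1, (64, d)) for _ in range(3))
out, weights = self_attention(tokens, wq, wk, wv)
```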

Imagine training your camera to denoise images without needing perfect "clean" examples. Self-supervised learning is making this a reality.

- This technique allows models to learn from noisy images alone.

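- Models are trained to denoise images without paired clean images. One common approach is blind-spot training, used by methods such as Noise2Void: some pixels are hidden, and the model learns to predict each hidden pixel's value from its neighbors. Because the noise is assumed to be independent from pixel to pixel, the neighbors carry information about the underlying clean signal but not about that pixel's noise, so the best the model can do is recover the clean structure. A related approach, Noise2Noise, trains on pairs of independently noisy shots of the same scene.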

- Because these models no longer depend on paired clean data, they can be trained directly on the kind of noisy images they will be used on, which makes the approach attractive for real-world scenarios.
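Blind-spot training can be sketched in a few lines. This minimal example follows a Noise2Void-style recipe under its usual assumption that noise is zero-mean and independent from pixel to pixel: a random subset of pixels is hidden by overwriting each with a random neighbor's value, and the loss is measured only at those pixels, against their noisy values. The `neighbor_mean` model is a hypothetical stand-in for a trained network.

```python
import numpy as np

def neighbors(img):
    """Up, down, left, and right neighbor of every pixel, stacked: shape (4, H, W)."""
    p = np.pad(img, 1, mode="reflect")
    h, w = img.shape
    return np.stack([p[0:h, 1:w + 1], p[2:h + 2, 1:w + 1],
                     p[1:h + 1, 0:w], p[1:h + 1, 2:w + 2]])

def blind_spot_batch(noisy, mask_frac, rng):
    """Hide a random subset of pixels by overwriting each with a random neighbor's value."""
    mask = rng.random(noisy.shape) < mask_frac
    choice = rng.integers(0, 4, noisy.shape)
    replacement = np.take_along_axis(neighbors(noisy), choice[None], axis=0)[0]
    return np.where(mask, replacement, noisy), mask

def blind_spot_loss(model, noisy, mask_frac, rng):
    """Loss only at hidden pixels; the target is their NOISY value (no clean image needed)."""
    masked_input, mask = blind_spot_batch(noisy, mask_frac, rng)
    pred = model(masked_input)
    return np.mean((pred[mask] - noisy[mask]) ** 2)

def identity(x):
    return x                                  # would score 0 without the blind spot

def neighbor_mean(x):
    return neighbors(x).mean(axis=0)          # HYPOTHETICAL stand-in for a trained network

rng = np.random.default_rng(0)
clean = np.full((128, 128), 0.5)
noisy = clean + rng.normal(0.0, 0.1, clean.shape)
loss_identity = blind_spot_loss(identity, noisy, 0.1, rng)
loss_neighbors = blind_spot_loss(neighbor_mean, noisy, 0.1, rng)
print(loss_identity, loss_neighbors)
```

The identity function, which would trivially score zero if it could see each pixel, now scores worse than a model that predicts from the neighbors, so training is pushed toward recovering structure rather than copying noise.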

What about those challenging low-light situations where noise is most prevalent? Future research is focusing on these specific conditions.

- Researchers are addressing the unique challenges of denoising images captured in low light. This often involves dealing with very low signal-to-noise ratios and specific noise characteristics that appear in such conditions.

- Models must be robust to a wide range of noise types and levels.

- Future algorithms will improve robustness and efficiency.
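Why low light is so hard comes down to arithmetic: with shot noise alone, a pixel that collects N photons has a signal-to-noise ratio of N / √N = √N, so a hundred times less light means ten times lower SNR. Sensors also add read noise that does not shrink with the signal, which makes dim pixels worse still. The short simulation below checks this; the photon counts and the `read_sigma` value are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

def measured_snr(mean_photons, read_sigma=0.0, n=100_000):
    """Simulate n pixels of a uniform patch and return mean / standard deviation."""
    signal = rng.poisson(mean_photons, n) + rng.normal(0.0, read_sigma, n)
    return signal.mean() / signal.std()

for photons in (10, 100, 1_000, 10_000):
    print(photons, round(measured_snr(photons), 1), round(np.sqrt(photons), 1),
          round(measured_snr(photons, read_sigma=3.0), 1))
```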

The continuous development and innovation of studies have provided more effective solutions for image denoising, as noted earlier.

Deep learning is poised to revolutionize image denoising further.