Revolutionizing Image Restoration: How CNNs are Transforming Photography

Understanding the Need for Image Restoration

Got a shoebox full of faded and damaged photos? Image restoration matters for keeping memories alive, making product pictures look better, and polishing creative work across many industries.

Old photos pick up all sorts of damage:

- Physical damage such as tears, scratches, and stains is common in older prints and makes details hard to see.

- Digital degradation causes problems too: compression artifacts, low resolution, and color fading all hurt digital images.

- Environmental factors such as light, humidity, and poor storage speed up how fast images decay.

Older approaches to fixing images have their limits, which is where AI comes in.

- Manual retouching takes a long time, needs skilled professionals, and produces results that can be subjective.

- Traditional software-based methods are limited by their fixed algorithms, often introduce artifacts, and struggle with complicated damage.

- AI-driven approaches, by contrast, offer automation, speed, and the ability to learn complex patterns from large datasets, producing restorations that look real.

Convolutional Neural Networks (CNNs), loosely inspired by how the visual system works, are leading these advances. A CNN learns features by optimizing its filters during training, processing images layer by layer to spot patterns and recover details. According to BairesDev, CNNs are behind facial recognition, self-driving cars, and medical imaging. (How Convolutional Neural Networks Work for Image ...)

AI is changing how we work with images in several ways.

- Enhancing old photos gives new life to memories we cherish, making them vibrant again.

- Improving product photography fixes and enhances images for e-commerce, making sure online shoppers get high-quality visuals.

- Streamlining post-processing saves time and resources with automated tools, making workflows more efficient.

As we move forward, understanding how CNNs are used in image restoration will open up new possibilities. The next section will get into the core ideas of CNNs and how they actually work.

Convolutional Neural Networks (CNNs): The Core Technology

Wondering what makes CNNs tick? Let's pull back the curtain on the technology that is changing image restoration.

Convolutional Neural Networks (CNNs) are a special kind of artificial neural network built to handle grid-like data. Think of images, which are basically grids of pixels. CNNs take their inspiration from the visual cortex, the part of the brain that processes visual information.

- CNNs are great at automatically learning hierarchical features from images. Instead of needing us to tell them what features to look for, CNNs learn them straight from the data. This makes them adaptable to different image restoration tasks.

- A big plus for CNNs is their ability to spot patterns in different parts of an image. By learning hierarchical features, CNNs can capture complicated structures and how the parts of an image relate to each other. This matters for tasks like removing noise, filling in missing regions, and increasing image resolution.

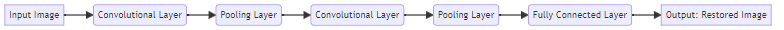

- CNNs are made up of several key layers, each doing its own thing. The most important layers are convolutional layers, pooling layers, and fully connected layers. These layers work together to pull out features, shrink down the data, and make final decisions.

We don't just see images as a bunch of pixels, right? We see patterns, edges, and shapes. CNNs try to do the same thing.

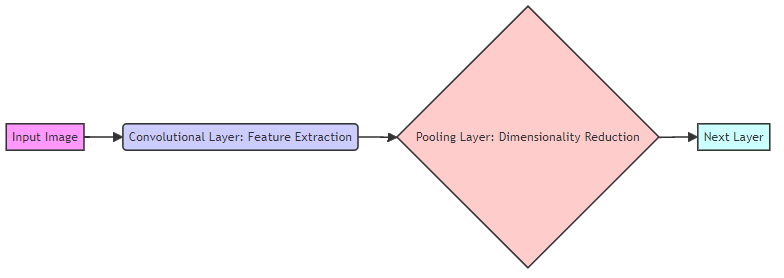

- Receptive fields in CNNs are like how neurons in our visual cortex react to specific parts of what we see. This is done by connecting each neuron in a convolutional layer to only a small, local area of the input. This localized processing is key because it lets the network build up an understanding of spatial relationships and patterns, starting with simple edges and building to more complex shapes.

- Convolutional layers are what pull features out of images. These layers use filters to find edges, textures, and shapes. Each filter goes over the whole image, making a feature map that shows where that specific feature is.

- Pooling layers shrink the data down while keeping the important features. This is done by downsampling the feature maps, which cuts down on the computing needed and makes the network more resilient to slight changes in the input. This downsampling helps achieve translation invariance, meaning the network can still recognize a feature even if it's shifted slightly in the image, making it more robust and better at generalizing.
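
To make the convolution and pooling steps above concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not how a production CNN is implemented: the 5x5 toy image, the hand-written `vertical_edge` filter, and the helper names `conv2d` and `max_pool2x2` are all made up for this example, and a real network would learn its filter values during training.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped before sliding.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Each output value depends only on a small local patch of the input.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


def max_pool2x2(feature_map):
    """Downsample by keeping the largest value in each non-overlapping 2x2 block."""
    pooled = []
    for i in range(0, len(feature_map) - 1, 2):
        row = []
        for j in range(0, len(feature_map[0]) - 1, 2):
            row.append(max(feature_map[i][j], feature_map[i][j + 1],
                           feature_map[i + 1][j], feature_map[i + 1][j + 1]))
        pooled.append(row)
    return pooled


# Toy image: dark on the left (0), bright on the right (9).
image = [[0, 0, 0, 9, 9] for _ in range(5)]
# Same scene with the edge shifted one pixel to the right.
shifted = [[0, 0, 0, 0, 9] for _ in range(5)]

# Hand-written filter that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 1],
                 [-1, 1]]

edges = conv2d(image, vertical_edge)
pooled = max_pool2x2(edges)
pooled_shifted = max_pool2x2(conv2d(shifted, vertical_edge))

print(edges[0])        # response along one row of the feature map
print(pooled)          # downsampled feature map
print(pooled_shifted)  # same result despite the one-pixel shift
```

In this toy case the feature map lights up only where the edge sits, and the pooled output is `[[0, 18], [0, 18]]` for both the original and the shifted image, which is the small-shift robustness described above. Larger shifts would move the response into a different pooling block, so the invariance is only approximate.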

Snapcorn offers a range of AI-powered tools that make it easy to improve your images. You can remove backgrounds, upscale resolution, and more. It's free and you don't even need to sign up.

- Background Remover: Instantly separate subjects for awesome portraits or product shots.

- Image Upscaler: Make images bigger without losing detail, perfect for printing or big screens.

- Image Colorizer: Bring old black and white photos to life with colors that look real.

- Image Restoration: Fix damaged photos and bring back memories you love.

Now that we've looked at the main parts of CNNs, the next section will dive into the CNN architectures used for image restoration.

CNN Architecture for Image Restoration

Did you know that how a CNN is built can change how good a restored image looks? Let's check out the specific designs, like encoder-decoder networks and generative adversarial networks, that are changing image restoration.

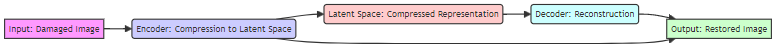

Remember how CNNs are inspired by our visual cortex? Encoder-decoder networks build on this by compressing images and then rebuilding them.

- The encoder compresses the input image into a latent space, capturing the most important information in a smaller representation. Think of it like making a super-efficient summary of the image. This compressed version tends to leave out noise and other details that don't matter.

- The decoder then rebuilds the image from this compressed info. It takes the summary and expands it back into a full image, filling in missing details and getting rid of noise.

- Skip connections are really important for keeping fine details. These connections link layers in the encoder straight to matching layers in the decoder, letting high-resolution features skip the compressed latent space. This is crucial because the latent space often compresses or loses fine details like sharp edges, intricate textures, and subtle patterns that are vital for a realistic reconstruction. By bypassing this compression, skip connections allow these details to be directly passed to the decoder, ensuring they're preserved and accurately reconstructed.
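
The information flow described above can be sketched with a deliberately tiny toy. The assumptions here are loud ones: a single row of pixels stands in for an image, the "encoder" is plain pair averaging, the "decoder" is value repetition, and the skip path is merged by simple addition. A real encoder-decoder uses learned convolutions on both sides and typically concatenates the skip features before further learned layers, so treat `encode` and `decode` as hypothetical helpers for illustration only.

```python
def encode(signal):
    """Toy encoder: halve the resolution by averaging neighbouring pairs.

    Returns the compressed latent plus the fine detail that averaging discards;
    the detail plays the role of the high-resolution encoder features.
    """
    latent = [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal), 2)]
    detail = [signal[i] - latent[i // 2] for i in range(len(signal))]
    return latent, detail


def decode(latent, skip=None):
    """Toy decoder: upsample by repeating values, then optionally merge skip features."""
    upsampled = [value for value in latent for _ in range(2)]
    if skip is None:
        return upsampled
    # A real network would concatenate and apply learned layers; here we simply add.
    return [u + s for u, s in zip(upsampled, skip)]


signal = [1.0, 5.0, 2.0, 2.0, 8.0, 0.0, 3.0, 3.0]  # 1-D stand-in for a row of pixels
latent, detail = encode(signal)

without_skip = decode(latent)        # sharp transitions are averaged away
with_skip = decode(latent, detail)   # fine detail bypasses the bottleneck

print(latent)
print(without_skip)
print(with_skip)
```

Without the skip path, the sharp jump from 8.0 to 0.0 comes back as a flat 4.0, 4.0. With it, the row is recovered exactly. In an actual restoration network the decoder would not just copy the detail back; it learns which high-resolution features to keep and which, such as noise or scratches, to suppress.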

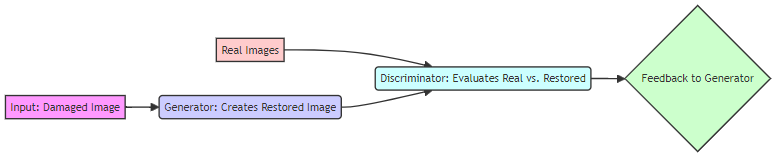

Ever wondered how AI can make images that look incredibly real? Generative Adversarial Networks (GANs) are key to making that happen.

- GANs have two neural networks: a generator and a discriminator. They are trained against each other in a back-and-forth process.

- The generator makes restored images from damaged inputs. It tries to create images that look just like real, high-quality photos. For image restoration, the generator might take a noisy image and try to generate a clean version, or it could take an image with a missing patch and try to fill it in realistically.

- The discriminator checks the restored images, telling the difference between real images and ones the network made. It's like a critic, pushing the generator to get better. In image restoration, the discriminator helps ensure that the generated details (like repaired scratches or filled-in areas) look natural and not just random pixels.

- This adversarial training process makes the restored images look more real and higher quality. The generator learns to make more realistic images, while the discriminator gets better at spotting fakes.
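
The back-and-forth between the two networks can be written down as two opposing loss terms. The sketch below assumes the discriminator outputs a probability that an image is a real photo and uses binary cross-entropy with the commonly used non-saturating generator loss. The score values are hypothetical numbers chosen for illustration, not measurements from any model.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for a single probability, clamped away from 0 and 1."""
    eps = 1e-12
    p = min(max(prediction, eps), 1 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(score_real, score_fake):
    """The discriminator wants real photos scored near 1 and restored ones near 0."""
    return bce(score_real, 1.0) + bce(score_fake, 0.0)


def generator_loss(score_fake):
    """The generator wants its restored output scored near 1 (mistaken for real)."""
    return bce(score_fake, 1.0)


# Hypothetical discriminator outputs: probability that an image is a real photo.
early = {"real": 0.9, "fake": 0.1}  # restored images are easily caught
later = {"real": 0.6, "fake": 0.5}  # restored images are harder to tell apart

g_early = generator_loss(early["fake"])
g_later = generator_loss(later["fake"])
d_early = discriminator_loss(early["real"], early["fake"])
d_later = discriminator_loss(later["real"], later["fake"])

print(f"generator loss:     {g_early:.3f} -> {g_later:.3f}")
print(f"discriminator loss: {d_early:.3f} -> {d_later:.3f}")
```

As the generator's outputs become harder to distinguish, its loss falls while the discriminator's loss rises. Training alternates between updating each network to reduce its own loss.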

How do you make sure a CNN gets the best results possible? Loss functions guide the restoration process by measuring how different the restored image is from the original.

- Pixel-wise loss measures the difference between the pixels in the restored image and the pixels in the original image. This makes sure the restored image is really close to the original on a pixel-by-pixel basis.

- Perceptual loss focuses on high-level image features, aiming for good visual quality instead of just matching pixels exactly. This can lead to more visually pleasing results because it prioritizes features that humans notice as important, like edges and textures, rather than just raw pixel values. This approach helps avoid restorations that look blurry or over-smoothed even though their pixel-wise error is low.

- Adversarial loss pushes the generator in a GAN to make realistic images by penalizing it when the discriminator can easily tell its output apart from real images.
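
A small sketch can show why pixel-wise and perceptual losses may disagree. It rests on a big assumption: `edge_features` is a hypothetical stand-in for the pretrained network that a real perceptual loss would use as its feature extractor, and a single row of pixels stands in for an image. The 0.1 weight in `total_loss` is likewise an arbitrary illustration, not a recommended setting.

```python
def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def edge_features(row):
    """Hypothetical stand-in for a pretrained feature extractor: neighbour differences."""
    return [row[i + 1] - row[i] for i in range(len(row) - 1)]


def pixel_loss(restored, original):
    """Compare raw pixel values."""
    return mse(restored, original)


def perceptual_loss(restored, original):
    """Compare in feature space rather than pixel space."""
    return mse(edge_features(restored), edge_features(original))


def total_loss(restored, original, perceptual_weight=0.1):
    """Weighted mix of both terms; the default weight is arbitrary."""
    return (pixel_loss(restored, original)
            + perceptual_weight * perceptual_loss(restored, original))


original = [0, 0, 0, 10, 10, 10]   # one row of pixels with a sharp edge
blurred = [0, 0, 3, 7, 10, 10]     # edge smeared out
brighter = [2, 2, 2, 12, 12, 12]   # edge intact, uniformly brighter

pixel_blurred = pixel_loss(blurred, original)
pixel_brighter = pixel_loss(brighter, original)
perc_blurred = perceptual_loss(blurred, original)
perc_brighter = perceptual_loss(brighter, original)

print(pixel_blurred, pixel_brighter)
print(perc_blurred, perc_brighter)
```

Here the pixel-wise loss prefers the blurred row (3.0 versus 4.0), while the feature-based loss prefers the row that keeps the edge sharp (0.0 versus about 10.8). That difference is the reason restoration models often combine several loss terms instead of relying on one.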

Understanding these CNN architectures and loss functions is an important step toward getting good results in image restoration. The next section will look at how CNNs are actually used in this field.

Applications of CNNs in Image Restoration

Did you know CNNs can bring old family photos back to life? Let's see how these networks are used to improve and colorize images, making cherished memories feel new again.

CNNs are really changing how we restore old and damaged photos, tackling common problems like:

- Removing scratches, tears, and stains. CNNs are trained to spot these imperfections and fill them in, bringing back the original image's details. For example, a CNN might detect the linear pattern of a scratch and then generate plausible pixel data to fill that gap, making it look like the scratch was never there.

- Restoring faded colors and boosting contrast. Over time, colors fade and contrast drops, but CNNs can analyze the image and estimate its original vibrancy.

- Colorizing black and white photos by using AI to predict plausible colors. This lets future generations see these pictures in a whole new way.

CNNs are really good at making images clearer and higher resolution, offering some key benefits:

- Increasing the resolution of low-resolution images while keeping them sharp. CNNs can analyze the pixels that are there and infer plausible detail to create a higher-resolution version that stays clear.

- Reducing noise and artifacts in images taken in bad lighting. CNNs can spot and cut down on noise, making images cleaner and nicer to look at.

- Improving the clarity and sharpness of images for different uses. CNNs can make blurry or out-of-focus images sharper, making them better for printing, showing, or further analysis.

Great visuals matter a lot for online businesses, and CNNs play a big part in making sure product images are up to par:

- Removing blemishes and imperfections from product photos. CNNs can automatically find and remove scratches, dust, or other flaws, making product visuals look perfect.

- Enhancing colors and details to make products look more appealing. CNNs can adjust colors, brightness, and contrast to create eye-catching product images that draw in shoppers.

- Creating high-quality images that boost sales. By making visuals look better and more accurate, CNNs help e-commerce businesses show off their products in the best possible light, leading to more customer interest and sales.

These applications show just how versatile and powerful CNNs are in image restoration. The next section will get into the tools and software you can use to put them to work.

Practical Considerations and Tools

Did you know that having the right tools and thinking about ethics can make or break your image restoration project? Let’s look at the practical side of using CNNs for photography, making sure you're ready to handle the future of image restoration responsibly.

Picking the right tools is really important for doing image restoration well. Whether you're a pro photographer or just doing it for fun, the right software and hardware can make a big difference in how good and how fast your work gets done.

- Software options range from big names like Adobe Photoshop to free ones like GIMP. For AI-enhanced restoration, dedicated AI photo editors like Snapcorn offer automated solutions. Snapcorn's 'Image Restoration' feature, for example, likely uses CNN architectures like encoder-decoders or GANs to automatically detect and fix damage like scratches or fading.

- Hardware needs depend on how complicated the jobs are. A dedicated GPU (Graphics Processing Unit) can seriously speed things up, while enough RAM (at least 16GB) makes sure things run smoothly. You also need enough storage for big image files.

- Cloud-based services make things accessible and easy. These platforms let you work on any device with internet, but they usually have subscription fees.

As with any powerful technology, ethics matter in image restoration. It's good to know about possible problems and to use these tools responsibly.

- Over-restoration can make images look fake and lose their original charm. Try to find a balance between making things better and keeping them real, so the image stays true to its history.

- Bias in AI models is a big deal. AI models learn from datasets, and if those datasets are unbalanced, the AI might produce unfair or discriminatory results. For image restoration, this could mean biases related to skin tones (making them look unnatural or less detailed), historical accuracy (misrepresenting the past), or the types of damage the model is trained to fix (being better at fixing some types of damage than others).

- Transparency is key. Be honest about using AI for image restoration, especially when showing restored images as historical records. This keeps trust and lets people understand the context of the image.

The world of AI-powered image restoration is changing fast, promising cool new things soon. Keeping an eye on these trends will be important for staying ahead in photography.

- Expect more realistic and seamless restoration techniques that have fewer artifacts and look natural. AI will get even better at filling in missing bits and fixing flaws without leaving a digital trace.

- Putting AI into regular photo editing software will make advanced restoration tools easier for more people to use. Imagine having AI features just built into your favorite editing software.

- Personalized restoration will become more common. AI will learn your own style and preferences and adjust the restoration process just for you.

As we wrap up this look, remember that CNNs are more than just tools; they're a new frontier for keeping and improving our visual history. By understanding what they can do, what they can't, and the ethics involved, you can use their power to create amazing and meaningful images.